Heurisms to live by

For many horseplayers, handicapping is first of all a mental game. Of course it becomes very visceral and far from Platonic when you place your money on this horse or that, but I tend to think it’s the mental challenge that keeps you coming back.

Harvey Pack said horseplayers should keep a record. So I did. His joke (and it is not going to jump off the page the way it does in his telling) is that when you ask a bettor how he is doing, he will say “I am just about even,” and you will know he is down. Pack said the emphasis of his own playing style was to enjoy the game and not lose money, which seems noble enough for my neighborhood.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

As I studied the elements of handicapping style over the last year, the backdrop for these pages has been a considerable surge of interest in Artificial Intelligence, in the popular press and elsewhere. Others better than I have noted the link between technology and magic, but the ability to predict the future (and, let’s face it, that is one of the big promises of AI, machine learning and the handicapping art) would really confirm technology’s magic power.

But I have been down this road before. Let me digress in that direction.

In fact, when I served in the hardware trade press, one of my beats was neural networks. These flowered and then failed in the 1990s [what followed is now called ‘the AI Winter’].

New and improved, back from the dead and better than ever, they are at the heart of the AI and machine learning rebirth today.

In the 1990s the funding for this came from the Defense Department, and the department’s disenchantment with the technology was in part behind its trip to the technology gulag. You see, the neurals could come up with decisions, but they couldn’t tell you how or why. That was a problem when the major decision they were being asked to make was whether to launch a nuclear war. There were other reasons for the neurals’ troubles back then, not the least of which was a paucity of data, the reverse of the problem today.

So I watch machine learning developments and try to learn what I can, but having seen this overhyped in the past, I keep one eye out for history repeating itself.

Machine learning is probably not as easy as software vendors say.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Like horseshoes, car repair or what have you, predicting outcomes is not as easy as it looks. One problem in machine learning is overfitting. That’s when a model’s function is fit too closely to a limited set of data points, so that it learns the noise along with the signal and predicts poorly on new data. Having many, many factors is not necessarily a path to success, data scientists are learning, much as their horse-handicapping brethren have over time.
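
A small sketch can make overfitting concrete. It is written in Python, with a made-up “true” rule (y = 2x + 1) and hand-picked noise values standing in for real data; the function names are hypothetical. A curve that threads every training point exactly scores perfectly on the data it has seen, while a plain straight line does far better on fresh points in between.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the polynomial that passes exactly through every (xs, ys) point."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def mse(predict, xs, ys):
    """Mean squared error of a prediction function on the given points."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the underlying rule is y = 2x + 1; the noise values are made up.
train_x = [0, 1, 2, 3, 4, 5, 6]
noise = [0.5, -0.7, 0.6, -0.4, 0.8, -0.6, 0.3]
train_y = [2 * x + 1 + e for x, e in zip(train_x, noise)]
test_x = [0.5, 1.5, 2.5, 3.5, 4.5, 5.5]
test_y = [2 * x + 1 for x in test_x]

a, b = fit_line(train_x, train_y)
line = lambda x: a + b * x
wiggle = lambda x: lagrange_predict(train_x, train_y, x)  # degree-6 curve through all 7 points

results = {
    "line": (mse(line, train_x, train_y), mse(line, test_x, test_y)),
    "degree-6 curve": (mse(wiggle, train_x, train_y), mse(wiggle, test_x, test_y)),
}
for name, (train_err, test_err) in results.items():
    print(f"{name:15s} train MSE {train_err:.3f}  test MSE {test_err:.3f}")
```

On these made-up numbers the curve’s training error is zero, yet its error on the in-between points is far larger than the line’s: it memorized the noise.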

This brings me to a brief consideration of the 2016 book Algorithms to Live By, by Brian Christian and Tom Griffiths. It looks at computer science and the algorithms that drive programs, including machine learning functions, and then takes a further leap by suggesting that you could organize your life according to algorithms. I am not sure to what extent the writers may have taken some things from Column A (good advice for making decisions) and crashed them for effect against things from Column B (how computers are programmed to do context switching, packet acknowledgement, interrupt handling and so on). Here is a link to a cartoon used to promote the tome: https://www.youtube.com/watch?v=iDbdXTMnOmE. We’ll keep it simple here, focusing on the authors’ discussion of heuristics and overfitting. They write:

Overfitting - There are always potential errors or noise in data, they remark. Machine learning models can fit patterns that appear in the data, but these patterns can be phantoms. This is well known in machine learning, but there are always new learners who will learn it for themselves, maybe by screwing up on your dime, Mr. Businessman. To deal with some problems, it is best to think less about them. [p. 151]

The Lasso - Biostatistician Robert Tibshirani, they write, came up with an algorithm to cope with an overabundance of factors. It’s called the lasso, and it puts a penalty on the total weight given to the different factors, pushing the weights of minor factors down to zero and leaving the few that have a truly big impact on the result. [p. 161] These authors see analogies in nature: “Living organisms get a certain push toward simplicity almost automatically, thanks to the constraints of time, memory, energy, and attention.” Now we’re getting to the part that moves their narrative from algorithms to heuristics. The guidance is for the data scientist to abhor complexity and conjure simplicity.
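
The lasso idea can be sketched in a few lines of Python. This is a minimal illustration, not Tibshirani’s own code: it uses coordinate descent with soft-thresholding, one common way to compute the lasso, and it assumes centered data so no intercept is needed. The factor names (“speed”, “post”, “silks”) and all the numbers are hypothetical. With no penalty the fit credits every factor a little; with a penalty the two minor factors are driven to exactly zero.

```python
def soft_threshold(value, penalty):
    """Shrink value toward zero by penalty; anything within [-penalty, penalty] becomes 0."""
    if value > penalty:
        return value - penalty
    if value < -penalty:
        return value + penalty
    return 0.0


def lasso(columns, y, penalty, sweeps=100):
    """Coordinate-descent lasso minimizing (1/2n)*||y - Xw||^2 + penalty*||w||_1.

    Assumes the factor columns and y are already centered, so no intercept is fitted.
    """
    n = len(y)
    w = [0.0] * len(columns)
    for _ in range(sweeps):
        for j, col in enumerate(columns):
            # Residual with every factor's contribution removed except factor j's.
            partial = [
                y[i] - sum(w[k] * columns[k][i] for k in range(len(columns)) if k != j)
                for i in range(n)
            ]
            rho = sum(col[i] * partial[i] for i in range(n)) / n
            scale = sum(v * v for v in col) / n
            w[j] = soft_threshold(rho, penalty) / scale
    return w


# Hypothetical, made-up factors (centered and mutually orthogonal for clarity).
factors = {
    "speed": [1, 1, -1, -1],
    "post": [1, -1, 1, -1],
    "silks": [1, -1, -1, 1],
}
# Made-up outcome: mostly speed, with small contributions from the other two.
y = [3.3, 2.7, -2.9, -3.1]

plain = lasso(list(factors.values()), y, penalty=0.0)  # no penalty: ordinary least squares
penalized = lasso(list(factors.values()), y, penalty=0.5)
for name, p, q in zip(factors, plain, penalized):
    print(f"{name:6s} unpenalized {p:5.2f}   lasso {q:5.2f}")
```

Here the unpenalized weights come out near 3.0, 0.2 and 0.1, while the lasso keeps only speed (2.5) and sets the other two to zero. How big a penalty to use is itself a judgment call.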

Early Stopping - "…considering more and more factors and expending more effort to model them can lead us into the error of optimizing for the wrong thing … that gives credence to the methodology around Early Stopping, which is about simply stopping the process short so that it does not become overly complex."
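
Early stopping, in its usual machine learning form, watches a model’s error on held-out data during training and quits once that error stops improving. Here is a minimal Python sketch of that rule. The `patience` setting and the sequence of validation errors are assumptions made up for illustration, standing in for a real training loop.

```python
def train_with_early_stopping(step, validation_loss, max_rounds=1000, patience=3):
    """Call step() repeatedly; stop once validation loss fails to improve for `patience` rounds.

    Returns (best_round, best_loss, rounds_run). A real loop would also save the
    model's parameters at best_round so they can be restored afterwards.
    """
    best_loss = float("inf")
    best_round = 0
    rounds_without_gain = 0
    for round_no in range(1, max_rounds + 1):
        step()
        loss = validation_loss()
        if loss < best_loss:
            best_loss, best_round = loss, round_no
            rounds_without_gain = 0
        else:
            rounds_without_gain += 1
            if rounds_without_gain >= patience:
                return best_round, best_loss, round_no
    return best_round, best_loss, max_rounds


# Made-up validation errors: improving at first, then worsening as the model overfits.
made_up_curve = iter([0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55, 0.70, 0.90])
result = train_with_early_stopping(
    step=lambda: None,  # placeholder for one round of real training
    validation_loss=lambda: next(made_up_curve),
    patience=3,
)
print(result)  # (4, 0.4, 7)
```

Training quits at round 7, three rounds after the best result at round 4, rather than grinding on while the model gets more complex and worse.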

Overfitting, they write, is a kind of idolatry of data, a consequence of focusing on what we’ve been able to measure rather than what matters. [p. 156] That may resonate for the machine logician, the Wall St. programmer and the horseplayer alike.

The authors find analogies to the Lasso and Early Stopping in Charles Darwin. In his journal, the great scientist is found weighing the pros and cons of marriage, ahead of his wedding to Emma Wedgwood. The authors similarly cite Benjamin Franklin as one who pursued a “Moral or Prudential Algebra,” made up of weighted pro and con lists for making decisions, which they see as akin to predictions. Darwin’s considerations are naturally limited to what can fit on the journal page (I recall Jack Kerouac limiting the length of his blues or haikus to the size of a pocket notebook page…), and the rumination ends with the resolution “Never mind, trust to chance.”
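
Franklin’s method, as he described it, was to list pros and cons in two columns, give each a weight, and strike out items of equal weight on opposite sides until it became clear where the balance lay. Cancelling equal weights leaves the same difference as subtracting the column totals, so a minimal Python sketch only needs a sum. The handicapping pros and cons and their weights are hypothetical, and a dead heat falls back on Darwin’s “trust to chance.”

```python
def prudential_algebra(pros, cons):
    """Weighted pro/con tally in the spirit of Franklin's 'Moral or Prudential Algebra'.

    Striking out equal weights on both sides leaves the same balance as
    subtracting the totals, which is what this does.
    """
    balance = sum(pros.values()) - sum(cons.values())
    if balance > 0:
        verdict = "do it"
    elif balance < 0:
        verdict = "don't"
    else:
        verdict = "never mind, trust to chance"
    return balance, verdict


# Hypothetical weights for a hypothetical bet.
pros = {"best speed figure last out": 3, "likes the distance": 2}
cons = {"moving up in class": 2, "short price": 2}
print(prudential_algebra(pros, cons))  # (1, 'do it')
```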

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Heuristics are more useful than detailed formulas, the authors of "Algorithms to Live By" write, and my experience here bears that out. What I found in the last year on this site is that you have to come up with something workable rather than something perfect. This brings to mind a couple of old saws: "Perfection is the enemy of completion" [a jumbling of Voltaire], and one that I fully invented myself: "whatever we do is going to be doable."

The conversation with myself in this last year on this blog has been about systematizing handicapping. From the start the feeling (gained from reading the experienced experts) was that class, speed, form, distance and pace are the overarching key elements of a horseplayer’s handicapping heuristic. [Side note here: the best work in that regard can be undone by bad wagering strategy.]
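
One simple way to turn those five factors into a repeatable heuristic is a rank sum: rank the field on each factor, add up each horse’s ranks, and treat the lowest total as the top pick. The Python sketch below is a hypothetical illustration, not the method of this blog or of the experts cited. It assumes every factor has already been boiled down to a number where higher is better, weights the factors equally, and breaks ties arbitrarily. The horses and ratings are made up.

```python
FACTORS = ("class", "speed", "form", "distance", "pace")


def rank_sum_picks(ratings):
    """Order horses by the sum of their per-factor ranks, lowest (best) total first.

    Assumes higher ratings are better on every factor; ties within a factor
    are broken by the order the horses are listed.
    """
    totals = {horse: 0 for horse in ratings}
    for factor in FACTORS:
        ordered = sorted(ratings, key=lambda horse: ratings[horse][factor], reverse=True)
        for rank, horse in enumerate(ordered, start=1):
            totals[horse] += rank
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical 0-10 ratings for a hypothetical three-horse field.
ratings = {
    "Horse A": {"class": 8, "speed": 9, "form": 6, "distance": 7, "pace": 5},
    "Horse B": {"class": 7, "speed": 7, "form": 9, "distance": 8, "pace": 8},
    "Horse C": {"class": 9, "speed": 6, "form": 5, "distance": 6, "pace": 9},
}
for horse, total in rank_sum_picks(ratings):
    print(horse, total)
```

Horse B comes out on top (9) without leading on speed or class, simply by never ranking last. Whether equal weights are the right call is exactly the kind of judgment the side note above warns about.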

Yet another side note: Google Home. As I composed this post, I turned to my trusty friend Google Home, which sits on my kitchen table and responds when I ask to hear Tampa Red, find out the score of the Red Sox game or learn about the weather in Louisville, Kentucky. “Hey, Google, What is the difference between an algorithm and a heuristic?” The answer blew my mind and follows here:

An algorithm is a clearly defined set of instructions to solve a problem. Heuristics involve utilizing an approach of learning and discovery to reach a solution. So, if you know how to solve a problem, then use an algorithm. If you need to develop a solution, then it's heuristics.

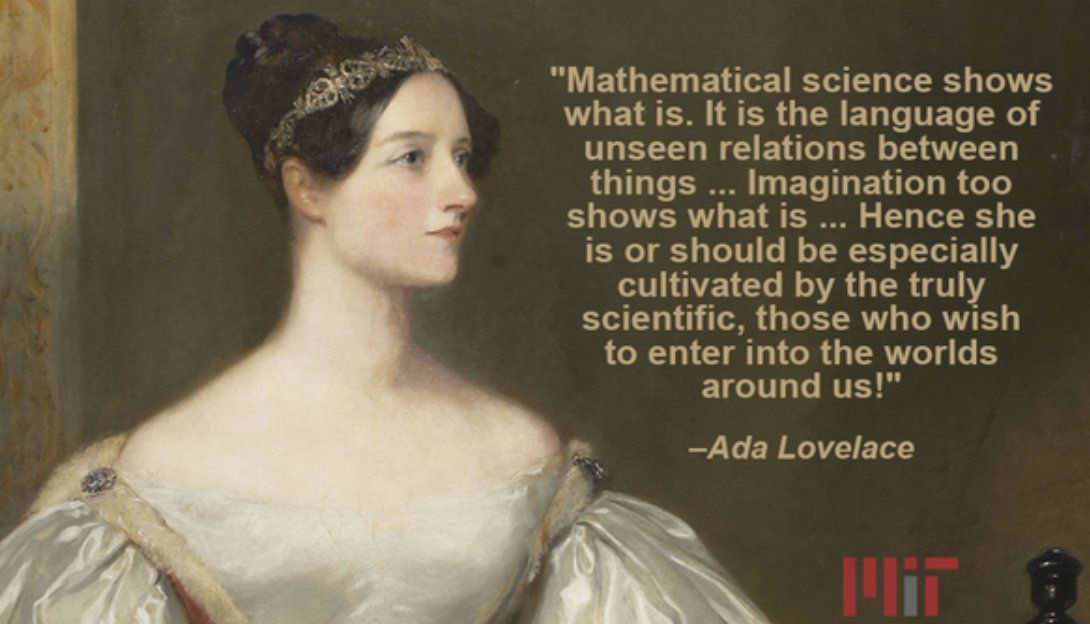

Today, the U.S. Senate, that august body, declared “Ada Lovelace Day”. With the picture above we honor the first programmer, who was also noted in her day as a punter.